Machine vision over the network

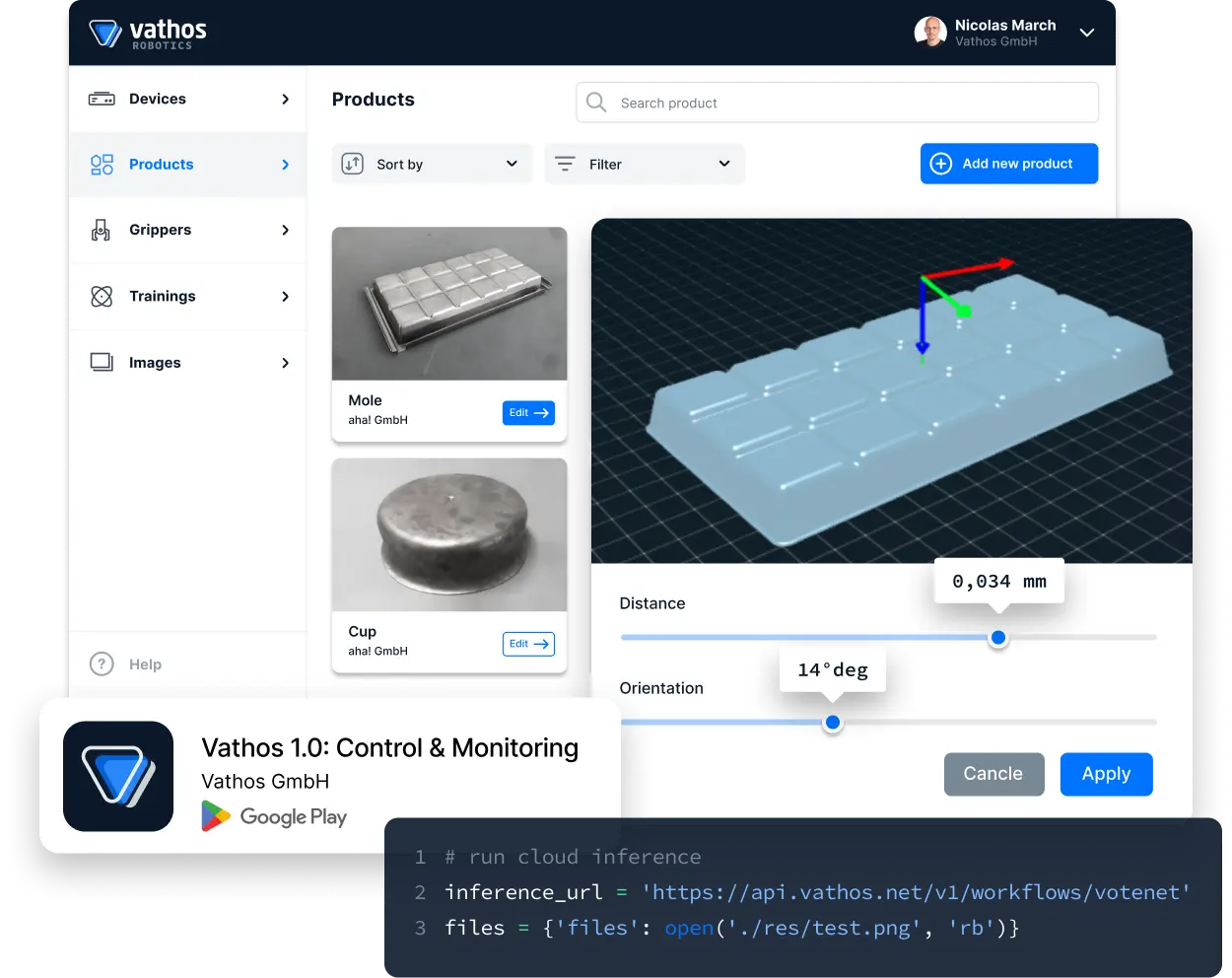

Our service-oriented API

The interface provides an extensive library of machine vision functions that can be invoked through requests over a network, whether a local network on the shop floor or the internet.

# Automatic teach-in

# Few parameters

# IoT-ready

cloud_inference.py

```python
import json
from time import perf_counter

# run cloud inference
# (excerpt: `product` and `configuration` are defined elsewhere in the script)
inference_url = 'https://api.gke.vathos.net/workflow/camera'
files = {'files': open('./res/test.png', 'rb')}
values = {'product': json.dumps(product),
          'configuration': json.dumps(configuration)}
tlc = perf_counter()  # start timing the round trip
```
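The excerpt stops before the request is actually sent. The following is a minimal, dependency-free sketch of how the same image and JSON form fields could be packaged as a `multipart/form-data` POST using only the Python standard library. The helper name `build_inference_request` is hypothetical. It is also an assumption that the endpoint accepts a plain multipart POST with the field names from the excerpt and a PNG image. Any authentication the service requires is not shown.

```python
import json
import urllib.request
import uuid


def build_inference_request(url, image_bytes, filename, product, configuration):
    """Build (but do not send) a multipart/form-data POST request.

    Hypothetical helper: the form field names 'files', 'product' and
    'configuration' mirror the excerpt; authentication headers, if the
    service needs any, are not included.
    """
    boundary = uuid.uuid4().hex
    parts = []
    # Text fields: product and configuration are sent as JSON strings
    for name, obj in (('product', product), ('configuration', configuration)):
        header = (f'--{boundary}\r\n'
                  f'Content-Disposition: form-data; name="{name}"\r\n\r\n')
        parts.append(header.encode('utf-8') + json.dumps(obj).encode('utf-8') + b'\r\n')
    # File field: raw image bytes, assumed to be a PNG
    header = (f'--{boundary}\r\n'
              f'Content-Disposition: form-data; name="files"; filename="{filename}"\r\n'
              'Content-Type: image/png\r\n\r\n')
    parts.append(header.encode('utf-8') + image_bytes + b'\r\n')
    # Closing boundary terminates the multipart body
    parts.append(f'--{boundary}--\r\n'.encode('utf-8'))
    return urllib.request.Request(
        url,
        data=b''.join(parts),
        headers={'Content-Type': f'multipart/form-data; boundary={boundary}'},
        method='POST',
    )
```

Passing the returned object to `urllib.request.urlopen` would send it, and calling `perf_counter()` again afterwards would give the round-trip time. This page does not document the response format, so the sketch leaves response parsing out.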

What makes our API different

Connectivity — the key to success

"Never touch a running system" seems to be the creed of classic machine vision. Solutions are deployed on a single device and kept in as much isolation as possible, apart from communication with the central process control (robot controller or PLC). But we are on the brink of an era of artificial intelligence, in which massive amounts of data need to be exchanged, stored, and processed on suitable infrastructure. The development of our API revolves around the idea that everything is connected and that data and workloads can move freely between different instances. This has significant ramifications for how machine vision is integrated and operated today.

Improved reliability and ease of use compared to isolated machine vision solutions

- Automatic learning from synthetic training data
- Easy setup requiring a minimal number of parameters
- Continuous optimization through learning during live operation

Modern algorithms

Our API provides state-of-the-art algorithms for classification, localization, and calibration based on images from 2D, 3D, and infrared cameras. Many of these rely on modern AI/machine learning approaches. Despite leaps in performance, those approaches suffer from the need to collect and process very large amounts of data. The distributed nature of the API allows us to move these workloads seamlessly to the cloud while taking our customers' security concerns seriously.

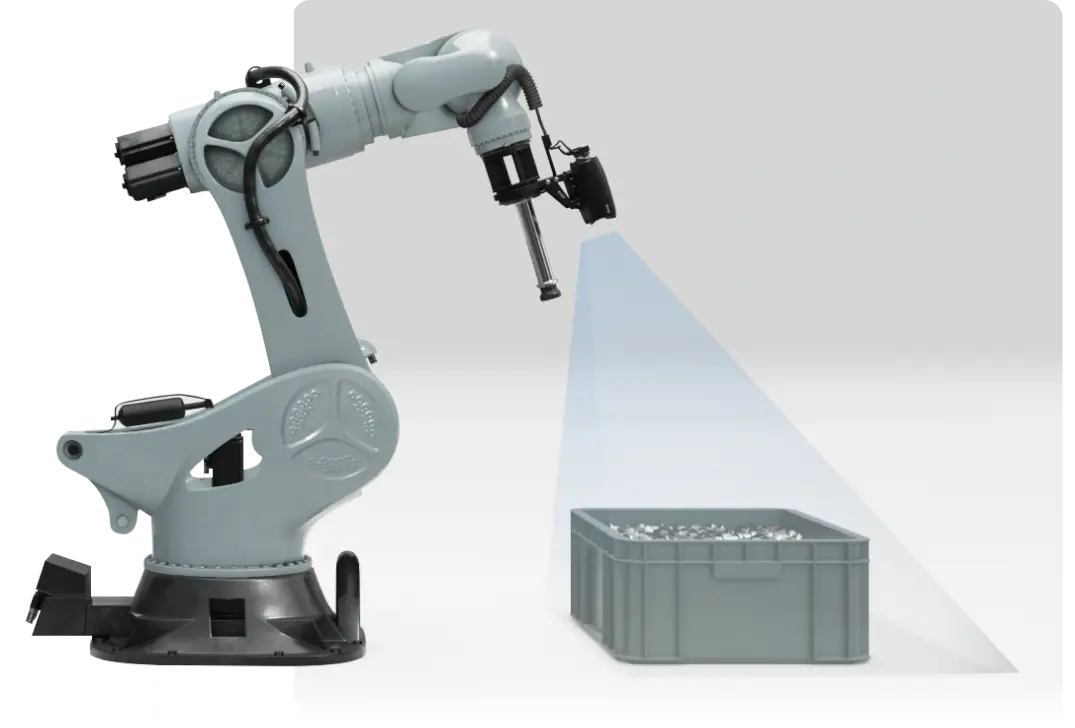

Hardware connectors

Typically, the automation task at hand determines the optimal choice of hardware for your project. To make integration as smooth as possible, we offer over-the-air delivery of software components, so-called connector services. These act as the glue between the three main actors of a vision-guided robotic cell: sensor, edge device, and robot controller. With the click of a button, you can access all API resources regardless of the robot and camera brands you choose.
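The page does not describe how connector services are built internally. As a hypothetical illustration of the "glue" idea only, the sketch below places brand-specific camera and robot code behind two small interfaces. The edge-side cycle can then run without depending on a particular vendor. All names (`Pose`, `CameraConnector`, `RobotConnector`, `run_cycle`, the stand-in classes) and the pose convention are invented for this example. They are not part of the VATHOS API.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol


@dataclass
class Pose:
    """Hypothetical 6-DoF pose: position in mm, orientation in degrees."""
    x: float
    y: float
    z: float
    rx: float
    ry: float
    rz: float


class CameraConnector(Protocol):
    """Anything that can deliver an image, whatever the camera brand."""
    def capture(self) -> bytes: ...


class RobotConnector(Protocol):
    """Anything that can forward a target pose to a robot controller."""
    def send_pose(self, pose: Pose) -> None: ...


def run_cycle(camera: CameraConnector, robot: RobotConnector,
              locate: Callable[[bytes], Pose]) -> Pose:
    """One vision-guided cycle on the edge device: capture, locate, forward."""
    image = camera.capture()
    pose = locate(image)  # e.g. a localization request to the API
    robot.send_pose(pose)
    return pose


# Stand-ins for brand-specific connectors, for demonstration only
class FakeCamera:
    def capture(self) -> bytes:
        return b'image-bytes'


class FakeRobot:
    def __init__(self) -> None:
        self.sent: List[Pose] = []

    def send_pose(self, pose: Pose) -> None:
        self.sent.append(pose)
```

A real connector would wrap a vendor SDK or a robot controller protocol. `typing.Protocol` is used here so that any class with matching methods qualifies without inheriting from a common base class. Swapping camera or robot brands then means swapping one object.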

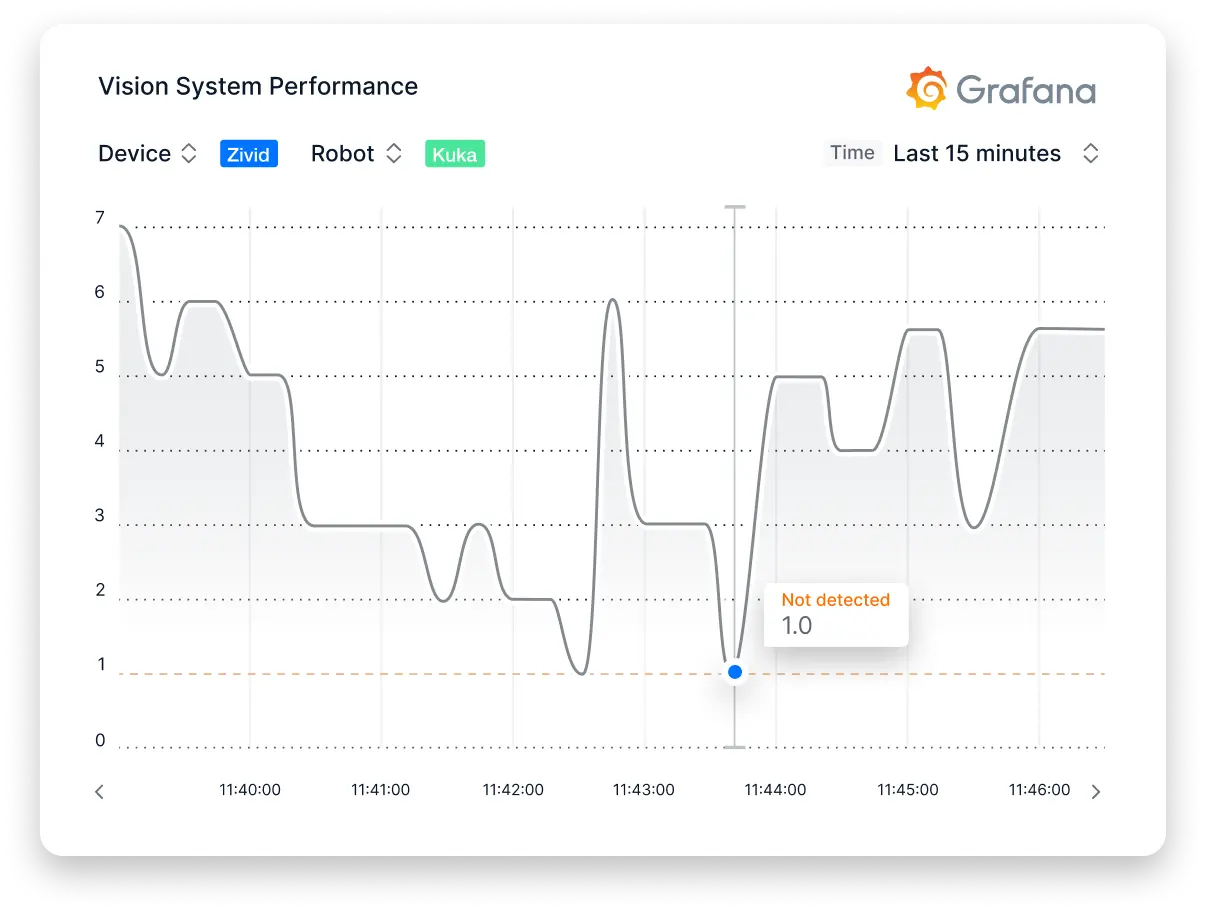

Remote monitoring

Our API turns any camera into an IoT device. Images reveal much not only about the state of the process they portray but also about the condition of the vision system itself. Operators are alerted to anomalies immediately. Optimal parameter values can be chosen from anywhere in the world. They are based on a holistic view of current and historical data rather than on snapshots of the present system state.
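This page does not specify how the API detects anomalies. A deliberately simple, hypothetical way to judge a new image against historical data rather than in isolation is a z-score test on a per-image statistic. Below, mean brightness stands in for whatever health metrics a real system would track. For example, a sudden drop might point to a covered lens or failed lighting. The function names and the default threshold of 3 standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def image_brightness(pixels):
    """Mean gray value of an image given as a flat sequence of 0-255 values."""
    return sum(pixels) / len(pixels)


def is_anomalous(history, value, z_threshold=3.0):
    """Flag `value` if it lies more than `z_threshold` standard deviations
    from the mean of the historical values (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu  # any change from a perfectly constant history
    return abs(value - mu) / sigma > z_threshold
```

With a brightness history of `[100, 102, 98, 101, 99]`, a new image at 101 passes, while one at 40 is flagged. In practice, the history window, the metrics, and the threshold would all need tuning per installation.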

Customizable user interface

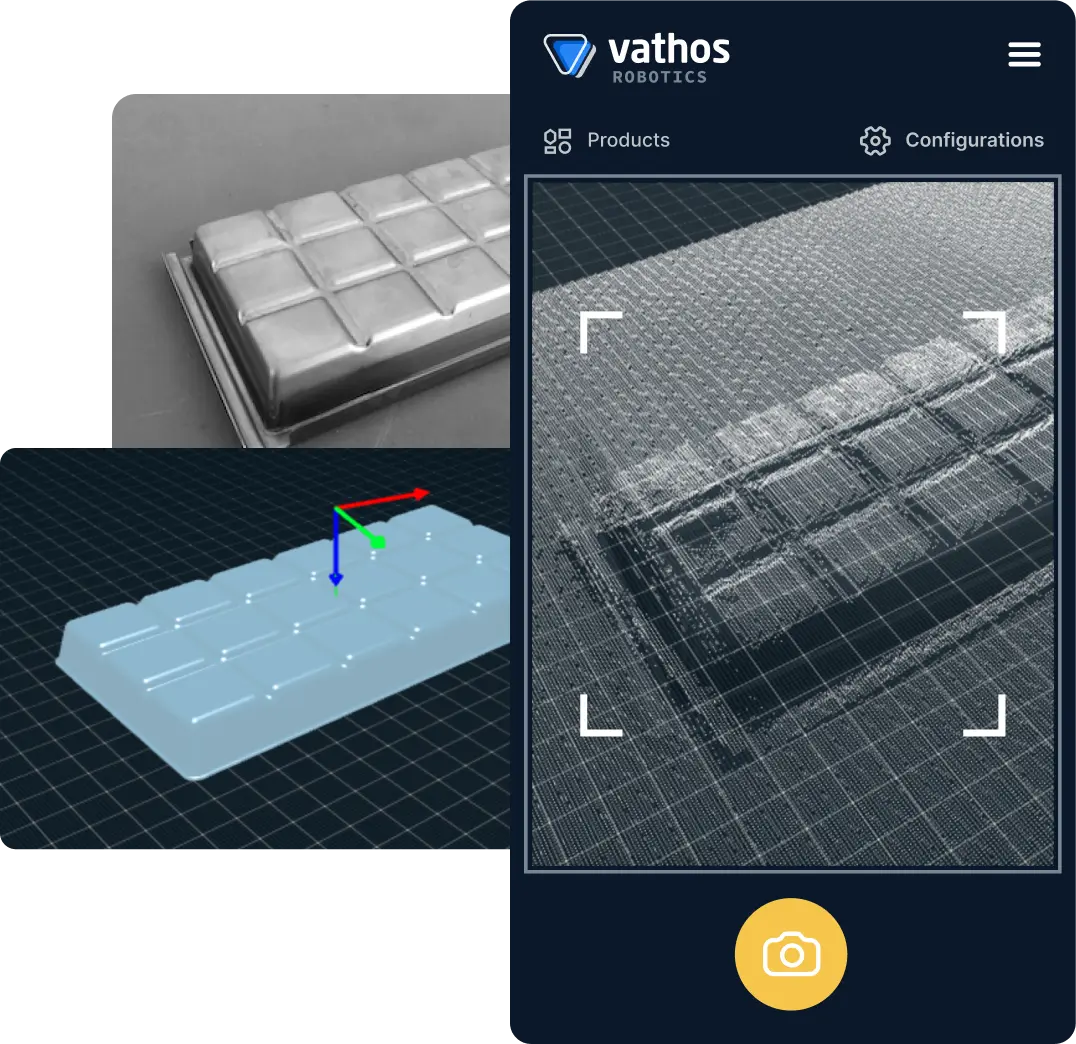

What makes software easy to use largely depends on the requirements of the customer. Offering an API is perfectly in line with our headless-first strategy. We build the best machine vision functionality for a broad audience and let customers design and implement the user interface that is right for them. For the basic configuration and control of our most common services, we do provide rudimentary mobile apps on the Google Play Store.

Continuous & automatic learning

To spare customers tedious labeling or teach-in tasks, all of our AI-based services are bootstrapped with synthetically generated data. The bidirectional connection between edge and cloud then establishes a closed loop for retraining based on real data collected during initial operation. Thanks to the distributed infrastructure underlying the API, our patent-pending algorithms keep improving over time, without any manual input from a human operator.
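The retraining loop can be pictured as a data pool that starts out purely synthetic and grows with real samples reported from the edge. The sketch below is a hypothetical illustration, not the patent-pending method. `Sample`, `ClosedLoopTrainer`, the `retrain_every` trigger, and the `train` callback are invented names. Real samples are simply assumed to arrive already labeled, because the page does not say how labels are obtained without an operator.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Sample:
    image_id: str
    label: str
    synthetic: bool  # True for generated data, False for data from live operation


@dataclass
class ClosedLoopTrainer:
    """Hypothetical sketch of an edge/cloud retraining loop.

    The model is bootstrapped on synthetic samples only; real samples
    reported from live operation are added to the pool, and once
    `retrain_every` new ones have arrived, `train` runs on the whole pool.
    """
    train: Callable[[List[Sample]], int]  # returns the new model version
    retrain_every: int = 100
    pool: List[Sample] = field(default_factory=list)
    pending_real: int = 0
    model_version: int = 0

    def bootstrap(self, synthetic: List[Sample]) -> None:
        """Initial training on synthetic data, before any real images exist."""
        self.pool.extend(synthetic)
        self.model_version = self.train(self.pool)

    def report(self, sample: Sample) -> bool:
        """Add a real sample from the edge; return True if a retrain ran."""
        self.pool.append(sample)
        self.pending_real += 1
        if self.pending_real >= self.retrain_every:
            self.model_version = self.train(self.pool)
            self.pending_real = 0
            return True
        return False
```

Retraining on the combined pool, rather than on real data alone, keeps the synthetic bootstrap data in play while real examples accumulate. Whether and how the two are weighted is left open here.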