Robot Vision

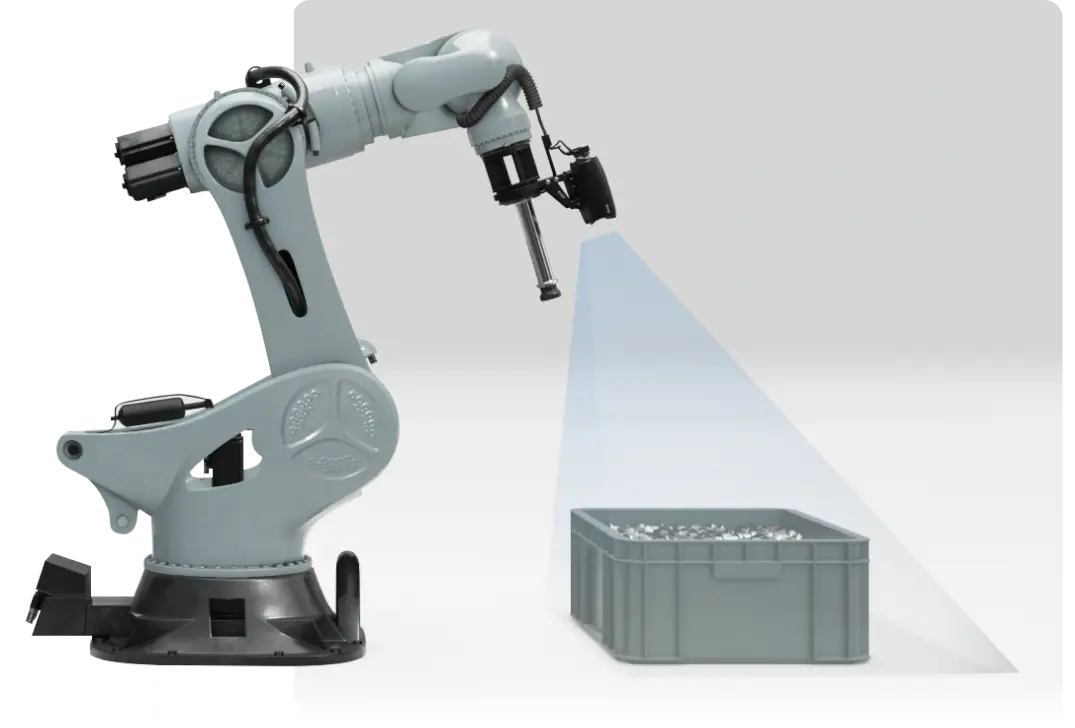

Camera-guided Robotics

Our software enables every integrator to use machine vision easily and reliably for complex pick & place applications.

# IoT-ready

# Few parameters

# No manual training

UR10e

detect_bearing.script

```
# run object detection
vathos = rpc_factory("xmlrpc", "http://ipc.local:30000/v1/xmlrpc")
vathos.trigger("binpick", True)
```

Computer Vision

AI-based object detection

For years, machine vision for camera-guided robotics was reserved for experts. With data-driven methods (“AI”), any integrator can implement complex applications reliably and in significantly less time.

Improved reliability and ease of use compared to isolated machine vision solutions

Automatic learning from synthetic training data

Easy setup requiring a minimal number of parameters

Continuous optimization through learning during live operation

Modern algorithms

Our software provides not just one recognition model but several that can be combined. This lets us handle machine loading from boxes, depalletizing of mixed pallets, object sorting, assembly, and much more.

Works with almost any hardware

The software supports all common 3D imaging sensors and robots on the market. Connectors can be switched on and off with a single click, so hardware can be replaced via plug & play. Because it uses OPC UA, the software can connect to common PLCs (Siemens, Bosch, Beckhoff, Rockwell, etc.).

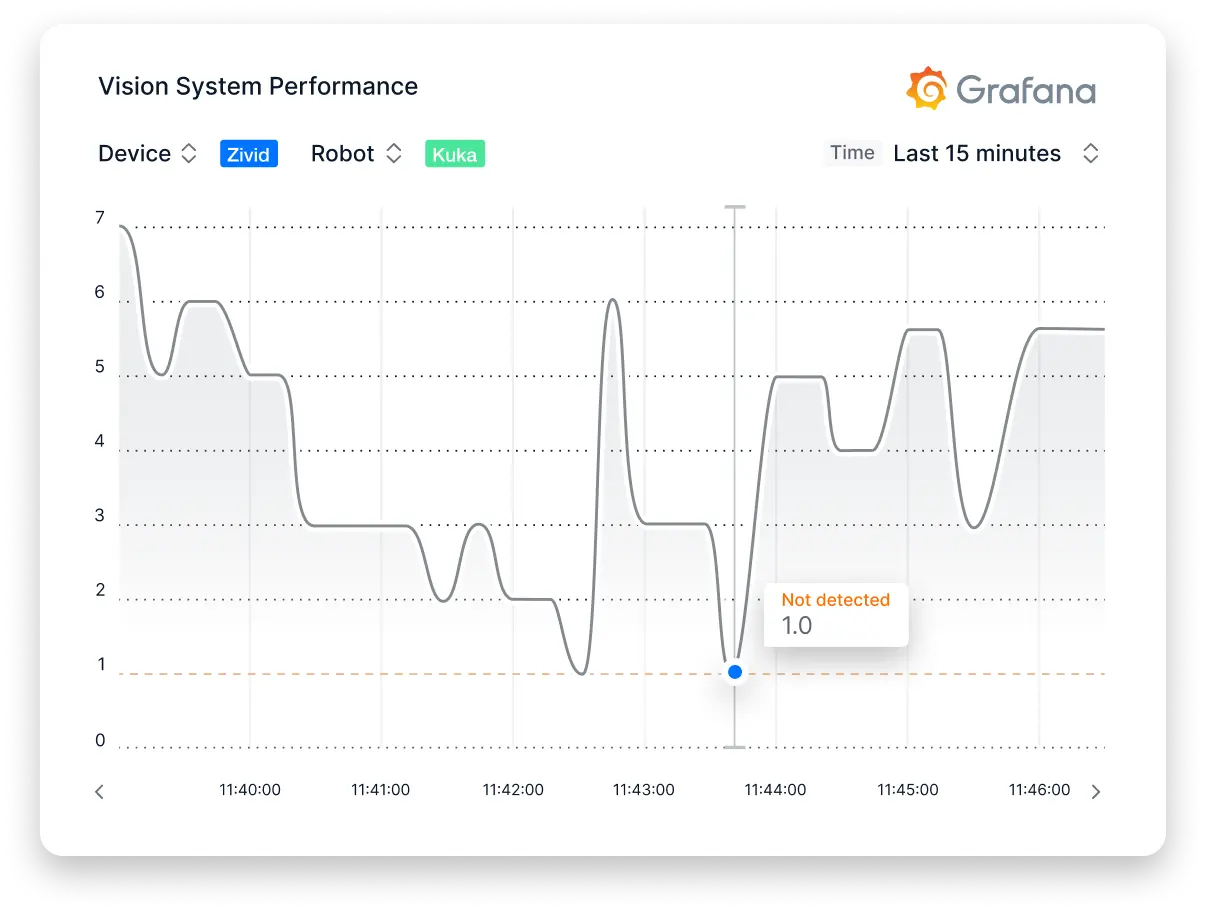

Remote monitoring

Our API turns any camera into an IoT device. Images reveal much not only about the state of the process they portray but also about the condition of the vision system itself. Operators are alerted to anomalies immediately. Optimal parameter values can be chosen from anywhere in the world, based on a holistic view of current and historical data rather than snapshots of the current system state.
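To make the idea of alerting on anomalies against historical data concrete, here is a minimal sketch of one common approach, a z-score check. It is not the product's actual method. The metric (mean image brightness), the sample values, and the function name `is_anomalous` are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Return True if `latest` deviates strongly from the historical values.

    `history` is a list of past measurements of some image-derived metric
    (hypothetical example: mean brightness per captured frame).
    """
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All historical values are identical: any change is a deviation.
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Made-up brightness values from earlier frames (illustration only).
brightness_history = [118.0, 121.5, 119.2, 120.8, 120.1, 119.6]

print(is_anomalous(brightness_history, 120.3))  # False: within normal spread
print(is_anomalous(brightness_history, 64.0))   # True: e.g. lighting failure
```

Comparing against a history rather than a single snapshot is what allows a slow drift or a sudden jump to be told apart from ordinary frame-to-frame noise.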

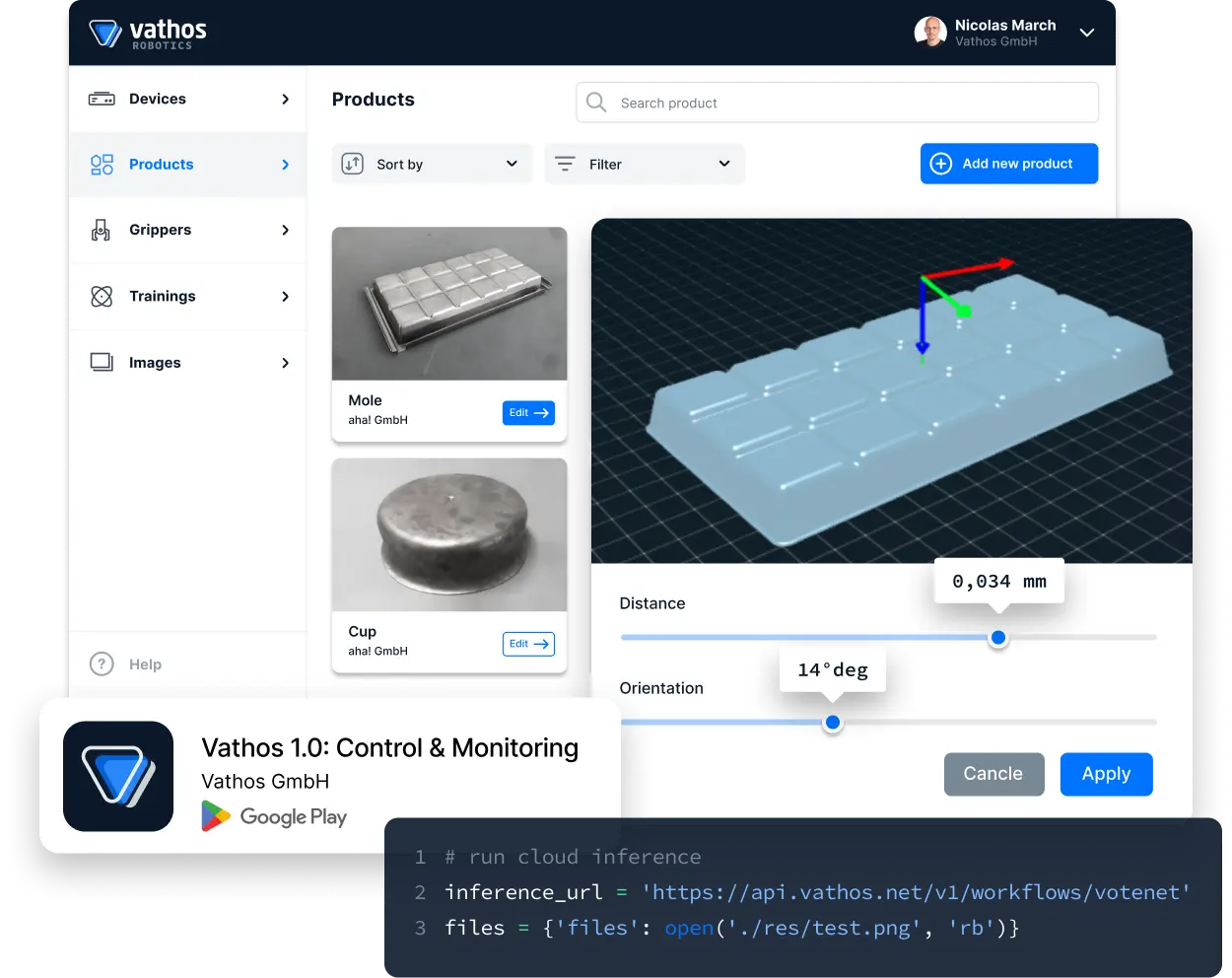

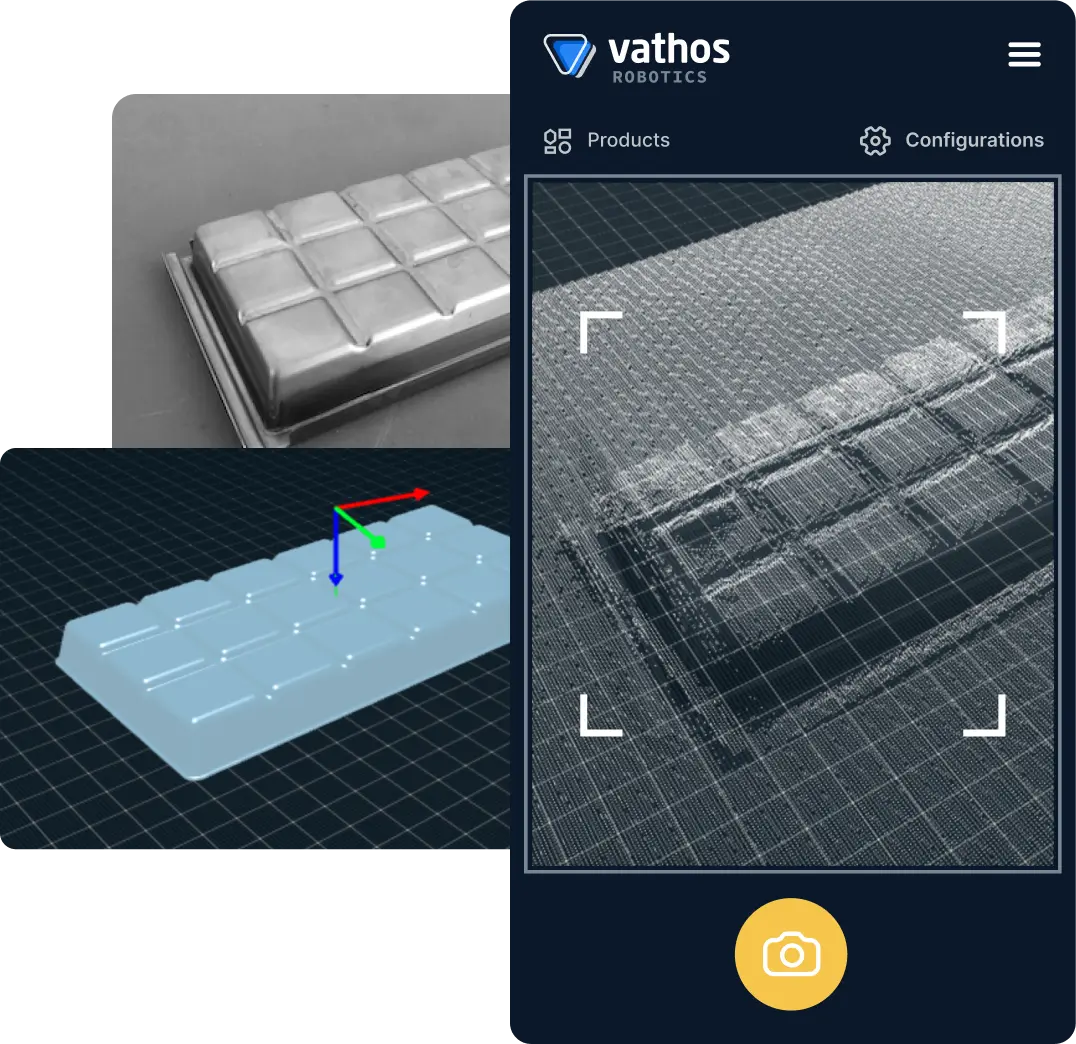

Control via code or user interface

The software can be conveniently configured and monitored using any standard browser, whether on a notebook, tablet, HMI, or even a smartphone. Alternatively, the software's API allows full control via program code. This enables OEM customers to integrate the software into their own solutions with minimal effort.
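As a sketch of what control via program code might look like outside the robot controller, the following Python snippet makes the same `trigger("binpick", True)` call that appears in the robot script on this page, using the standard-library XML-RPC client. So that it runs without any hardware, it talks to a local stub server. The stub, its return value, and the helper names `start_stub_server` and `trigger_detection` are assumptions for illustration, and the meaning of the second argument is not documented here. In a real setup the URL would be the service endpoint, such as the `http://ipc.local:30000/v1/xmlrpc` address used in the script.

```python
import threading
import xmlrpc.client
from xmlrpc.server import SimpleXMLRPCServer


def start_stub_server():
    """Start a local stand-in for the vision service (illustration only)."""
    # Port 0 lets the operating system pick a free port.
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    calls = []

    def trigger(workflow, flag):
        # Hypothetical behavior: record the request and acknowledge it.
        calls.append((workflow, flag))
        return True

    server.register_function(trigger, "trigger")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, calls


def trigger_detection(url, workflow="binpick"):
    """Call trigger(workflow, True) on the XML-RPC endpoint at `url`."""
    with xmlrpc.client.ServerProxy(url) as proxy:
        return proxy.trigger(workflow, True)


# Demo against the stub; replace the URL with the real endpoint in practice.
server, calls = start_stub_server()
host, port = server.server_address
result = trigger_detection(f"http://{host}:{port}/")
server.shutdown()
server.server_close()
print(result, calls)
```

Because XML-RPC is plain HTTP, the same call can be issued from a PLC gateway, a test script, or an OEM application without any robot-specific runtime.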

No manual training

AI systems typically require large amounts of data to learn from. Providing this data manually for image processing, by “labeling” or “demonstrating”, can be very time-consuming. We use methods that eliminate the need for the user to train the system. In addition, the recognition models improve continuously and on their own, based on production data.
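The reason synthetic training data needs no manual labeling can be shown with a toy sketch: when a program places the object in the image, the label is known by construction. The example below is a deliberately simplified illustration and not the product's data pipeline. The image size, the rectangular "object", and the function name `make_sample` are assumptions.

```python
import random


def make_sample(rng, width=32, height=32, obj_w=8, obj_h=6):
    """Render one synthetic image (nested lists) plus its bounding-box label."""
    # Choose a random position such that the object fits inside the image.
    x = rng.randint(0, width - obj_w)
    y = rng.randint(0, height - obj_h)

    # Black background with a bright rectangle standing in for the object.
    image = [[0] * width for _ in range(height)]
    for row in range(y, y + obj_h):
        for col in range(x, x + obj_w):
            image[row][col] = 255

    # The label comes for free: the generator knows where it drew the object.
    label = {"x": x, "y": y, "w": obj_w, "h": obj_h}
    return image, label


rng = random.Random(0)  # fixed seed for reproducibility
dataset = [make_sample(rng) for _ in range(100)]
print(len(dataset), dataset[0][1])
```

A realistic pipeline would render 3D models with varied poses, lighting, and sensor noise, but the principle is the same: every generated image arrives with an exact label.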